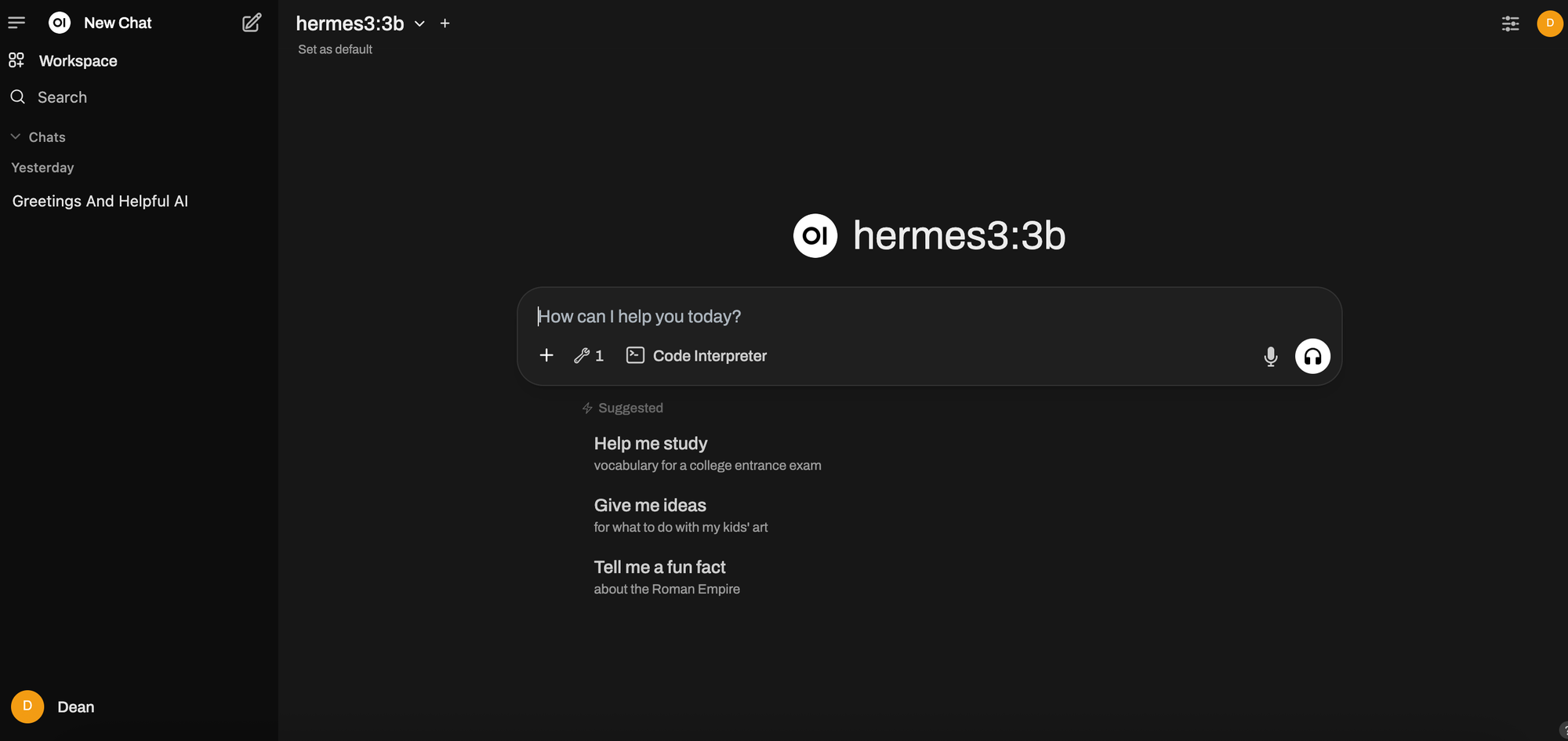

Setting Up Open WebUI + Ollama on CPU

A streamlined setup for local LLM interaction via a web interface.

Why We Did It 🖥️

After experimenting with local model serving, we needed a lightweight, fast web interface to interact with LLMs running directly on CPU — no GPU acceleration.

The goals were:

- Keep it simple

- Use existing hardware (Blackbox 🖤)

- Make models available via browser

- Minimize memory and disk impact

Software Stack

- Ollama (as the LLM backend)

- Open WebUI (for a slick chat frontend)

- Docker Compose (to manage the services)

Key Steps

1. Install Docker and Docker Compose

```shell
sudo apt update
sudo apt install docker.io docker-compose
```

(Already done on Blackbox during initial setup.)

2. Set Up Ollama in Docker

Created a directory for Ollama data:

```shell
mkdir -p ~/ollama-data
```

Pulled and ran the container:

```shell
docker run -d \
  --name ollama \
  --restart unless-stopped \
  -p 11434:11434 \
  -v ~/ollama-data:/root/.ollama \
  ollama/ollama
```

✅ Now Ollama listens on localhost:11434.
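To confirm that the port is actually answering, a quick probe with `curl` can help. The following is a minimal sketch, not part of the original setup: the function name `ollama_up` is hypothetical, and it assumes `curl` is installed on the host.

```shell
# Hypothetical helper: returns 0 if an Ollama server answers at the given base URL.
ollama_up() {
  local base_url="${1:-http://localhost:11434}"
  # The root endpoint replies with a short plain-text banner when the server is up.
  curl --fail --silent --show-error --max-time 5 "$base_url/" >/dev/null
}

if ollama_up; then
  echo "Ollama is reachable"
else
  echo "Ollama is not reachable; check 'docker logs ollama'"
fi
```

Passing a different base URL (for example the machine's LAN address) checks reachability from another host's point of view.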

3. Set Up Open WebUI

Created another directory:

```shell
mkdir -p ~/open-webui-data
```

Launched WebUI:

```shell
docker run -d \
  --name open-webui \
  --restart unless-stopped \
  -p 3000:8080 \
  -v ~/open-webui-data:/app/backend/data \
  -e 'OLLAMA_API_BASE_URL=http://host.docker.internal:11434' \
  ghcr.io/open-webui/open-webui:main
```

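Note: On Linux, `host.docker.internal` is not defined by default. Either add `--add-host=host.docker.internal:host-gateway` to the `docker run` command (Docker 20.10 or newer) or replace the hostname with your machine's IP address. Newer Open WebUI releases name this variable `OLLAMA_BASE_URL`, so check the documentation for the version you run.

Environment Settings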

Here’s what we set inside Open WebUI (auto-populated after startup):

| Variable | Value |
|---|---|
| OLLAMA_API_BASE_URL | http://host.docker.internal:11434 |
| PORT | 8080 |
| DATABASE_URL (optional) | Not set (SQLite is used internally) |

No changes needed — out of the box 🎉.
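The software stack lists Docker Compose, while the steps above use plain `docker run`. As a rough sketch of how the same two containers might be described in a single Compose file, the snippet below writes one out. Several details are assumptions rather than part of the original setup: the `~/llm-stack` directory is hypothetical, the `version: "3"` line is there only for older `docker-compose` releases, Open WebUI reaches Ollama through the Compose service name `ollama` instead of `host.docker.internal`, and the variable is spelled `OLLAMA_BASE_URL` as in current Open WebUI documentation.

```shell
# Hypothetical project directory for the Compose file.
compose_dir="${COMPOSE_DIR:-$HOME/llm-stack}"
mkdir -p "$compose_dir"

# Quoted heredoc: nothing inside is expanded by the shell.
cat > "$compose_dir/docker-compose.yml" <<'EOF'
version: "3"
services:
  ollama:
    image: ollama/ollama
    container_name: ollama
    restart: unless-stopped
    ports:
      - "11434:11434"
    volumes:
      - ~/ollama-data:/root/.ollama
  open-webui:
    image: ghcr.io/open-webui/open-webui:main
    container_name: open-webui
    restart: unless-stopped
    ports:
      - "3000:8080"
    volumes:
      - ~/open-webui-data:/app/backend/data
    environment:
      # Assumption: containers on the same Compose network resolve each other by service name.
      - OLLAMA_BASE_URL=http://ollama:11434
    depends_on:
      - ollama
EOF

echo "Wrote $compose_dir/docker-compose.yml"
```

From that directory, `docker-compose up -d` would start both services. The standalone containers would need to be removed first (`docker rm -f ollama open-webui`) to free the names and ports; the data directories are reused as they are.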

Model Management

Inside Open WebUI:

- Download models (e.g., Hermes 3:3B, Mistral Instruct) using the UI

- Select model from dropdown

- Customize prompts and system behavior
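Models can also be fetched from the command line through the Ollama CLI inside the container, which is handy for scripting. This is a sketch under a few assumptions: the wrapper name `ollama_pull` and the `DRY_RUN` switch are hypothetical, the container is named `ollama` as in step 2, and `mistral:instruct` is only an example tag, so check the Ollama library for the exact name you want.

```shell
# Hypothetical wrapper around the Ollama CLI inside the "ollama" container.
# Set DRY_RUN=1 to print the command instead of running it.
ollama_pull() {
  local model="${1:-}"
  if [ -z "$model" ]; then
    echo "usage: ollama_pull <model-tag>" >&2
    return 2
  fi
  # Rebuild the positional parameters as the command to run.
  set -- docker exec ollama ollama pull "$model"
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Example tag only; prints the command without contacting Docker.
DRY_RUN=1 ollama_pull mistral:instruct
```

Dropping `DRY_RUN=1` runs the pull for real. A model pulled this way should then show up in the Open WebUI dropdown, since both tools read the same Ollama data directory.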

Final Result

- Access Open WebUI at http://blackbox:3000

- Smooth interaction with local models on CPU

- Persistent chats and model settings

- Minimal RAM/CPU usage, ideal for our ThinkCentre M710q
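To put numbers on the resource usage, `docker stats` can take a one-shot snapshot of both containers. This is a small sketch: the function name `container_usage` is hypothetical, and it assumes the container names from steps 2 and 3.

```shell
# Hypothetical helper: one-shot CPU and memory snapshot for the named containers.
container_usage() {
  if ! command -v docker >/dev/null 2>&1; then
    echo "docker not found on PATH" >&2
    return 127
  fi
  docker stats --no-stream \
    --format 'table {{.Name}}\t{{.CPUPerc}}\t{{.MemUsage}}' \
    "$@"
}

container_usage ollama open-webui || echo "Could not read stats; are both containers running?"
```

Running it once while idle and once during a generation gives a rough picture of how much headroom the machine has for a given model.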

Thoughts 💭

This setup is perfect for casual local AI exploration, blogging, research, and private LLM use — all without needing cloud access or massive GPU power.
