War by Algorithm: How AI Moved From Safety Promises to Military Contracts

Inspired by The Verge’s Decoder podcast episode “How AI safety took a backseat to military money”

Artificial intelligence is no longer confined to research labs, chatbots, and corporate productivity tools. Increasingly, it is finding its way into the defense industry. Military applications of AI are not hypothetical; they are already being tested and, in some cases, deployed.

The Verge’s Decoder podcast recently explored this transformation, focusing on how companies like OpenAI and Anthropic, once defined by strong safety commitments, are now securing major defense contracts. What emerges is a story about money, influence, and the difficult tradeoffs between innovation, ethics, and global security.

This is not only a story about technology. It is about politics, law, and human judgment. It is about the institutions that decide how far and how fast AI can move into the realm of war.

From Safety-First Posture to Defense Partnerships

For years, AI research companies emphasised safety. OpenAI’s original mission was to ensure artificial general intelligence benefited all of humanity. Anthropic built its brand on careful model alignment and principled caution.

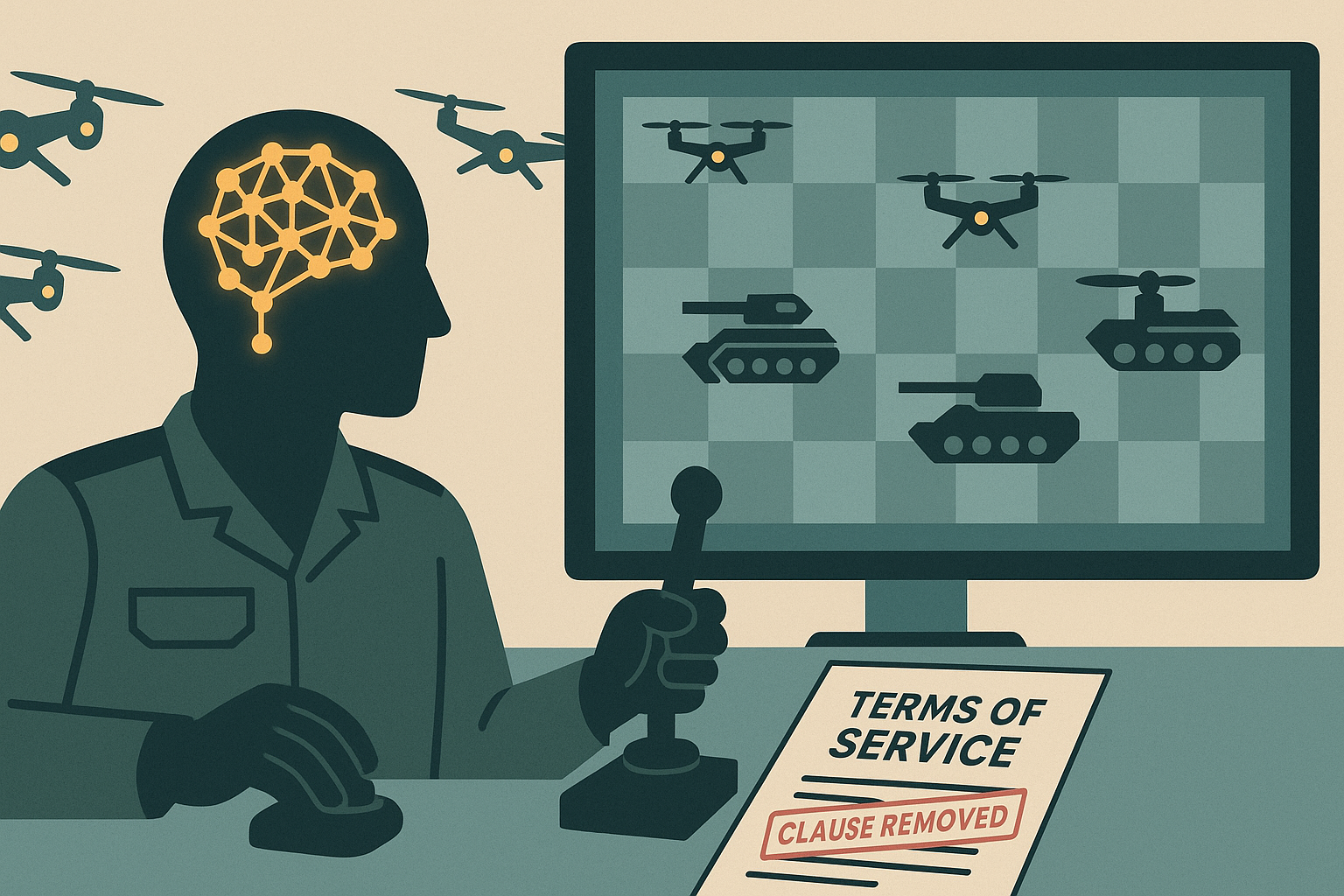

That language has softened. In January 2024 OpenAI quietly removed a ban on “military and warfare” applications from its usage policies. By the end of that year it had announced a partnership with defense technology company Anduril, and in 2025 it landed a $200 million contract with the United States Department of Defense.

Anthropic followed a similar path, partnering with Palantir and securing its own $200 million Department of Defense contract. Once committed to a “safety-first” identity, the company now offers its Claude models for intelligence and military applications.

These developments show how quickly money and influence can reshape the posture of organisations that originally positioned themselves against weaponisation. Safety has not disappeared from the conversation, but it no longer defines the boundaries of what these companies will do.

Why Militaries Want AI

The attraction is obvious. In an era of rapid digital warfare, information overload, and geopolitical instability, AI looks like a powerful asset.

- Speed of decision making: AI can process sensor data, satellite imagery, communications, and intelligence reports in seconds. Commanders who once waited hours for analysis can act in near real time.

- Autonomous systems: Unmanned drones and vehicles equipped with AI reduce risk to human soldiers while extending surveillance and strike capabilities.

- Predictive analytics: Algorithms can detect patterns in enemy movements or logistics that humans might miss.

- Operational efficiency: Everything from supply chain management to maintenance scheduling can be optimised with AI.

In theory, AI can compress the decision cycle, increase precision, and reduce human error. But as the Decoder episode highlights, these advantages come bundled with ethical and practical dangers that are not easy to untangle.

The Dangers of Delegating War to Algorithms

Loss of Human Judgment

The most immediate fear is simple: lethal decisions could be delegated to machines. Human oversight is still the official standard, but in practice the “human in the loop” often becomes a rubber stamp. In high-speed engagements, the person can be reduced to a formality, with little time to meaningfully question the algorithm’s recommendation.

Black Box Decisions

Modern AI models are notoriously opaque. They operate as high-dimensional systems that resist simple explanation. Once these models are embedded in classified military systems, oversight becomes even more difficult. If a model misclassifies a civilian vehicle as a hostile target, who is accountable?

Escalation Pressure

When rival nations fear falling behind, they may deploy systems that are not fully tested. A premature rollout of AI weapons could create miscalculations or accidents that spiral into broader conflict. The arms race logic pushes risk-taking, not restraint.

Bias in the Data

Training data carries biases and blind spots. A flawed dataset can make an AI system more likely to misidentify certain groups or environments. In civilian contexts that is harmful. On a battlefield it can be catastrophic.

Proliferation and Leakage

Once a military AI system exists, it is only a matter of time before elements of it leak, are reverse-engineered, or proliferate. Non-state actors and rogue regimes may acquire powerful autonomous tools without any commitment to restraint.

A New Military-Industrial-AI Complex

The traditional military-industrial complex is already vast. Adding AI labs to the network of defense contractors, intelligence agencies, and government departments creates a new hybrid structure.

The Verge’s reporting shows how blurred the lines have become. Companies that present themselves as civilian technology leaders are also defense partners. A public-facing AI chatbot can coexist with classified contracts in surveillance or weapons.

This blurring matters because it makes oversight harder. Civilian branding shields companies from scrutiny, while defense secrecy hides details from the public.

Safety Principles Under Strain

The shift toward defense raises urgent questions about whether long-held principles can survive in practice.

- Safety and alignment first: What happens to safety when timelines are accelerated to meet contract deliverables?

- Human in the loop: Can humans meaningfully intervene when latency is measured in milliseconds?

- Transparency and accountability: How can independent oversight function when projects are classified?

- Global treaties: International agreements lag far behind technological developments.

- Democratic control: When decisions are hidden in corporate contracts and classified briefings, how can citizens have a say?

The answers are not encouraging. Each principle is under pressure, and the incentives point toward faster deployment, not slower deliberation.

What Oversight Could Look Like

If the world is going to embrace AI in military contexts, new guardrails are urgently required. A few proposals recur among technical experts and ethicists:

- Clear red lines: Fully autonomous lethal systems should be banned. Humans must retain genuine control over kill decisions.

- Rigorous testing: AI systems should be subject to independent verification and stress testing before deployment.

- Explainability by design: Military AI must be designed for interpretability from the start. Every decision should leave an audit trail.

- International treaties: New arms-control agreements are needed to govern AI weapons, similar to chemical or nuclear treaties.

- Civilian oversight: Parliamentary and congressional committees must have visibility, even on classified programs.

- Focus on augmentation, not substitution: AI should assist logistics and intelligence, not replace human moral judgment.

These measures will not eliminate risk, but they can mitigate the most dangerous possibilities.

The Global Landscape

This is not just about the United States. China, Russia, Israel, and many European nations are investing heavily in military AI. Some of these governments have even fewer constraints on ethical use than the United States or its allies.

If democratic societies do not lead with principles, authoritarian ones will set the terms instead. The danger is not only escalation but also the normalisation of algorithmic war as a standard practice.

Why It Matters Beyond Defense

The military is often a proving ground for technologies that later spill into civilian life. The internet, GPS, and drones all began as military projects. If AI is first scaled and stress-tested in war, the lessons it carries into civilian use may reflect the values of secrecy, speed, and lethality rather than transparency and trust.

For researchers, educators, and technologists, this should be alarming. The values embedded in early deployments often echo for decades.

A Call for Civil Engagement

The Verge episode makes a critical point: these are not only technical issues for engineers or generals. They are democratic issues. Citizens, lawmakers, and institutions must demand oversight and participate in shaping policy.

Silence will not slow down the contracts. But public pressure, legislation, and international advocacy can at least set boundaries.

For technologists, educators, and ordinary citizens alike, the question is whether we will accept AI as just another weapon or insist on a more restrained vision.

Conclusion

The shift from “safety first” to “defense partner” in the AI industry is not a side story. It is one of the central developments of our era. Companies that promised restraint are now receiving hundreds of millions of dollars to develop systems for war.

The moral stakes are profound. Once lethal decisions are delegated to machines, the meaning of human agency in war begins to erode. Once secrecy dominates, democratic oversight withers. Once arms races take hold, restraint becomes almost impossible.

AI can and should play a role in defense, but only under careful conditions. If those conditions collapse, we risk building a world where wars are fought at machine speed and human judgment is an afterthought.

The future of war is being negotiated in contracts, algorithms, and classified briefings right now. Citizens need to be part of that negotiation too.

References and Further Reading

This piece draws on the reporting and analysis from The Verge’s Decoder podcast episode “How AI safety took a backseat to military money”.

Additional links from the episode’s resource list:

- OpenAI is softening its stance on military use

- OpenAI awarded $200 million US defense contract

- OpenAI partners with defense tech company Anduril

- Anthropic launches Claude service for military and intelligence use

- Anthropic, Palantir, Amazon team up on defense AI

- Google scraps promise not to develop AI weapons

- Microsoft employees occupy headquarters in protest of Israel contracts

- Microsoft’s employee protests have reached a boiling point