The Bitter Lesson, Revisited: Richard Sutton and the Limits of Human Design

Summary

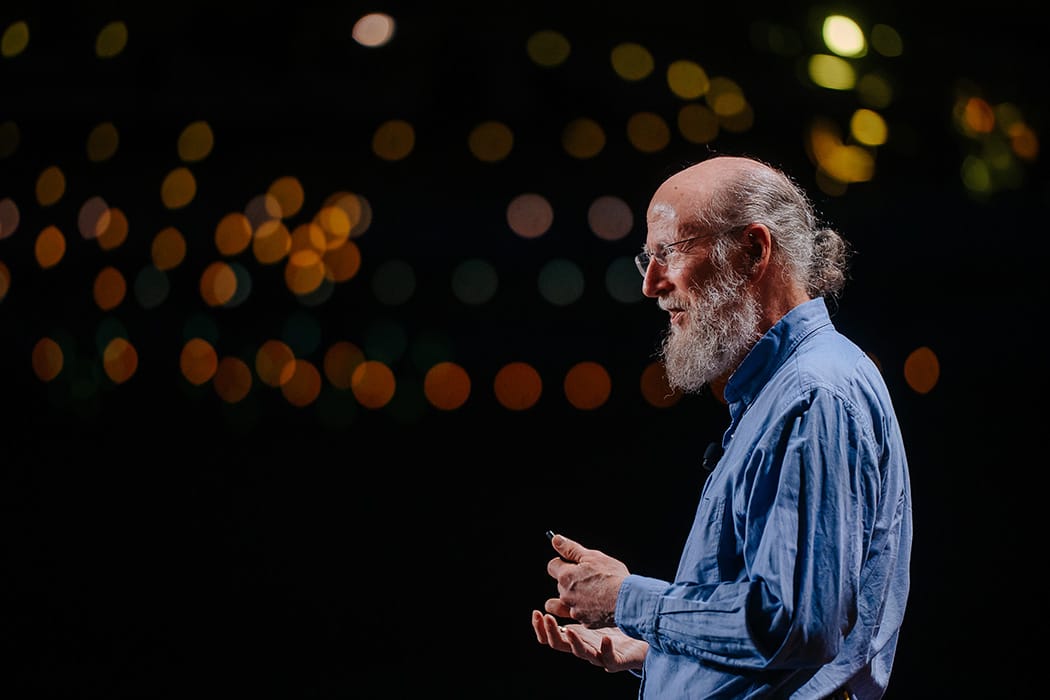

In his conversation with Dwarkesh Patel, Richard Sutton, one of the founding figures of modern reinforcement learning, revisits the core argument that has defined his career: that intelligence, whether human or artificial, emerges not from clever human design but from simple algorithms coupled with vast computation and experience. The episode is a masterclass in humility before scale and a reminder that our role as designers may be far smaller than we would like to believe.

The full transcript and audio of the episode are available on the Dwarkesh Podcast.

The Architect Who Gave Up Architecture

Richard Sutton is not just another AI researcher with bold ideas; he is one of the architects of the field itself. His work on temporal-difference learning helped lay the foundations of reinforcement learning, the branch of AI behind systems ranging from AlphaGo to modern robotics. With Andrew Barto, he co-authored Reinforcement Learning: An Introduction, a textbook that sits on nearly every AI researcher’s shelf.

But Sutton’s wider fame rests on a short 2019 essay titled The Bitter Lesson. In it, he argued that the biggest breakthroughs in AI have come not from building more sophisticated domain-specific models but from general methods, search and learning, that scale with available computation. Every time researchers try to out-engineer learning by hand-crafting features, rules, or architectures, the lesson has been “bitter”: in the long run, general learning methods powered by scale win.

The Lesson That Refuses to Go Away

Listening to the Dwarkesh Patel interview feels like revisiting that essay in long form, but with more philosophical depth. Sutton does not just defend his thesis; he extends it. He describes intelligence as an inevitable outcome of interaction between a general learner and a rich environment.

In his view, the story of AI is not a story of human ingenuity but of discovery: discovering that the universe itself is structured in a way that rewards general-purpose learning. Every domain, whether language, vision, control, or strategy, ultimately yields to the same principle: compute + data + time.

He speaks almost like a naturalist observing evolution. Just as the human brain arose from billions of iterations of trial and error, so too do large language models emerge from a kind of computational evolution. The success of systems like GPT-5, Gemini, or Claude is a triumph not of design but of persistence: the willingness to let a general learner interact with the world long enough to stumble into understanding.

The Collapse of “Understanding”

Sutton’s framing raises uncomfortable questions. If intelligence can emerge from scale and computation, what does that make of “understanding”?

When we marvel at the fluency of GPT-5 or the reasoning of DeepMind’s AlphaZero, we often seek an inner model, some human-like comprehension hiding beneath the surface. Sutton resists that impulse. He suggests that understanding is not a property of minds but of systems in equilibrium with their environments. Intelligence is not knowing; it is doing.

That is a hard pill to swallow for educators, philosophers, and programmers alike. It erases the romance of craftsmanship. It tells us that a trillion-parameter stochastic parrot might capture more of the structure of reality than our carefully theorised lesson plans ever could.

And yet there is beauty in that surrender: the idea that learning itself, not teaching, is the fundamental act of intelligence.

Education in the Age of the Bitter Lesson

For those of us working in education, Sutton’s ideas can feel both threatening and liberating. If general learning mechanisms outperform human design, what happens to the notion of curriculum?

In classrooms, we pride ourselves on shaping understanding: sequencing ideas, anticipating misconceptions, and structuring tasks so that meaning unfolds in manageable increments. But Sutton’s world is one where structure eventually loses to exposure. Given enough raw experience, the learner self-organises.

There is an uncomfortable parallel between Sutton’s AI philosophy and what happens when students use ChatGPT: they bypass structure. They draw on a model trained on a vast body of human text with no curricular gatekeeping, and in doing so they pick up patterns of expression that mimic understanding. We might deride that as “cheating,” but Sutton would call it inevitable.

He is not advocating chaos in learning, but he is pointing to a deeper truth. Every system that learns, whether artificial or biological, eventually finds that the rules designed for it become constraints. The path to mastery is paved with the rubble of the scaffolds that built it.

The End of Explainability

One of the most striking moments in the Patel interview comes when Sutton is asked about interpretability. Should we strive to understand the internals of these massive systems? His answer is characteristically deflating: explainability may simply not matter.

He draws an analogy to human cognition: we do not understand how our own brains work, yet we function perfectly well. The insistence on explainability might be, in Sutton’s eyes, a lingering bias, a residue of the belief that intelligence must be designed.

It is an unsettling position. It invites us to live in a world where machines think in ways forever opaque to us, and we must learn to trust outcomes rather than mechanisms. But there is a pragmatic wisdom to it. Sutton reminds us that the goal of science is prediction and control, not comfort. If a model predicts accurately, then perhaps it understands in all the ways that matter.

The Humility of Scale

What makes Sutton so compelling is not just his technical authority but his humility. He does not posture as a visionary; he sounds more like a monk of computation. His “bitter lesson” is not a boast but a confession, an admission that intelligence is not ours to engineer, only to nurture.

That humility is instructive. It suggests a new ethos for those of us experimenting with AI in education and research: to stop fighting the tide of scale and start learning from it. Instead of asking, “How can we make AI more human?”, perhaps we should ask, “What can human learning borrow from AI?”

If LLMs can absorb meaning from raw exposure, what does that say about the conditions under which our students learn best? If intelligence thrives on feedback loops, interaction, and iteration, maybe our classrooms should look less like lectures and more like training environments, spaces where curiosity replaces curriculum and computation replaces instruction.

Reflection

Sutton’s worldview sits uneasily beside traditional notions of teaching and understanding, yet it carries a strange optimism. The idea that learning is universal, that given enough data and experience any sufficiently general learner can learn, reframes intelligence as an emergent property of persistence rather than privilege.

As educators, that is both humbling and inspiring. It suggests that our task is not to pour knowledge into students but to build environments where their own learning algorithms can flourish.

The bitter lesson, then, is not just about machines. It is about us, and our reluctance to trust in learning itself.