AI’s Hidden Cost: The Environmental Toll of a Tokenized Future

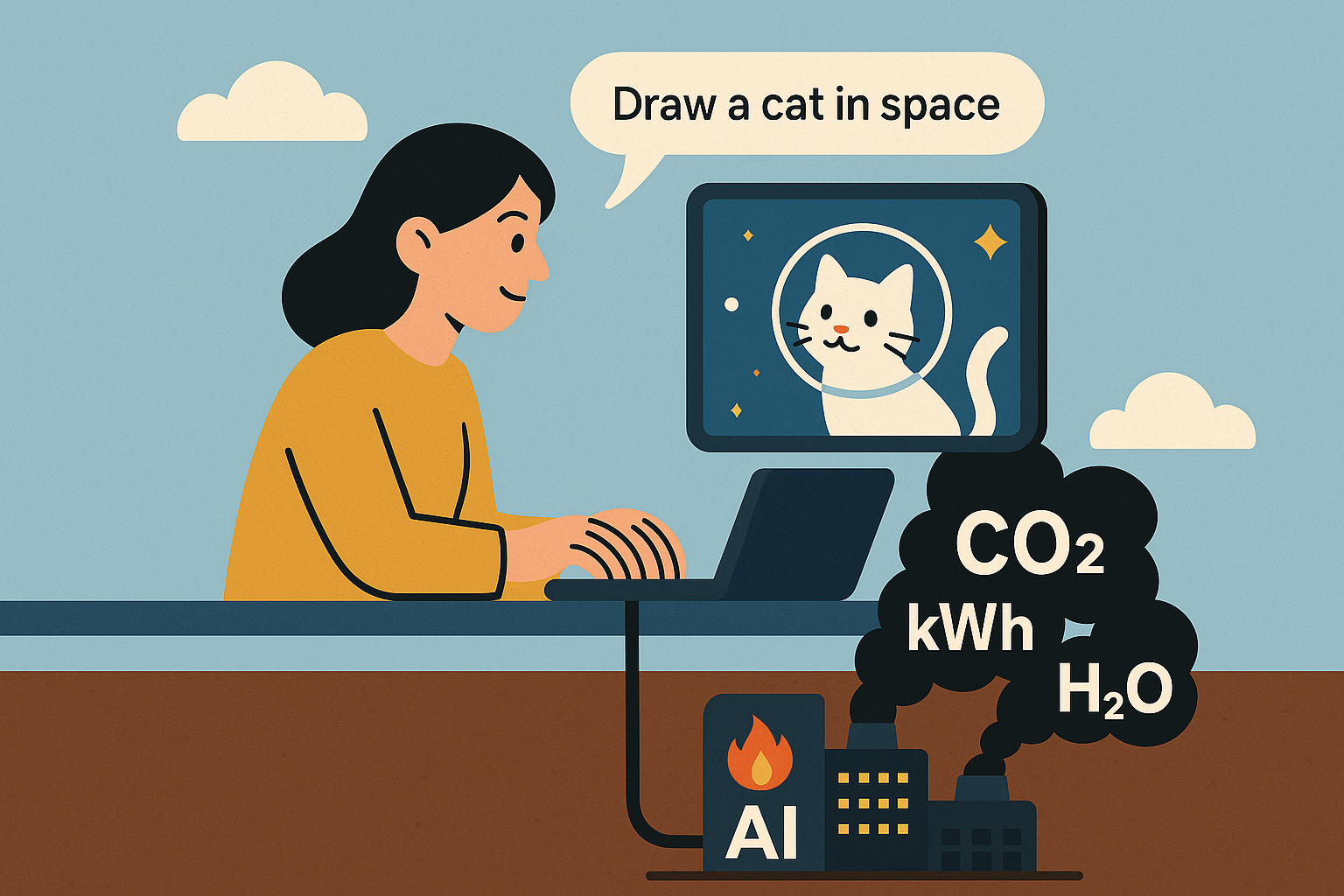

To most users, AI feels weightless. Type in a prompt, get a poem or a photo or a fake Morgan Freeman voice in return. But under the surface, the computation required for each of these tasks is vast, energy-intensive, and carbon-heavy. Especially at scale.

Take large language models (LLMs) like GPT-4, Claude, or Gemini. Every prompt and every reply is measured in tokens — tiny units of text processed by the model.

What Even Is a Token?

Tokens are chunks of words, typically about 4 characters of English text each. The sentence "I love AI and climate research" is 6 words, or about 8 tokens. On average, 1,000 tokens ≈ 750 words (roughly a typed A4 page). Most AI pricing and power calculations are based on token usage.
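As a rough illustration of the 1,000 tokens ≈ 750 words rule of thumb, the sketch below estimates a token count from a plain word count. The function name `estimate_tokens` is hypothetical, and this is not a real tokenizer: actual counts vary by model and by language.

```python
# Rule of thumb from the text: 1,000 tokens ≈ 750 words.
WORDS_PER_TOKEN = 750 / 1000


def estimate_tokens(text: str) -> int:
    """Rough token estimate from a whitespace word count (not a real tokenizer)."""
    word_count = len(text.split())
    return round(word_count / WORDS_PER_TOKEN)


sentence = "I love AI and climate research"
print(len(sentence.split()), "words, roughly", estimate_tokens(sentence), "tokens")
```

For anything billing-related, the provider's own tokenizer is the only reliable count; this estimate is only good for back-of-the-envelope sizing.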

A 2023 repository mining study analyzed 1,417 machine learning models on the Hugging Face Hub, revealing a decline in carbon emissions reporting and highlighting the dominance of NLP applications. The study also identified correlations between emissions and model attributes like size and dataset.

→ Exploring the Carbon Footprint of Hugging Face’s ML Models: A Repository Mining Study

Newer, larger models like GPT-4 and Claude 3 likely consume more energy per token, especially when run in environments with low renewable energy penetration.

Images, Audio, and Video: When Art Becomes Energy-Hungry

Generative image models like DALL·E 3, Midjourney, and Stable Diffusion XL have ballooned in popularity, but with popularity comes compute.

A 2023 study by researchers at Hugging Face and Carnegie Mellon University found that generating 1,000 images with Stable Diffusion XL results in carbon emissions equivalent to driving 4.1 miles in an average gasoline-powered car.

→ This Is How Much Power It Takes To Generate A Single AI Image

Multiply that by millions of daily image generations and the energy bill grows rapidly.
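To make that multiplication concrete, here is a minimal sketch that scales the study's figure (1,000 images ≈ 4.1 miles of driving) linearly. The daily volumes are hypothetical placeholders, not usage data for any real service, and linear scaling is itself an assumption.

```python
# Figure quoted above: 1,000 Stable Diffusion XL images ≈ 4.1 miles of driving.
MILES_PER_1000_IMAGES = 4.1


def equivalent_miles(num_images: int) -> float:
    """Driving-distance equivalent for a number of generated images (linear scaling)."""
    return num_images / 1000 * MILES_PER_1000_IMAGES


# Hypothetical daily volumes, chosen only to show how the total grows.
for daily_images in (1_000, 1_000_000, 10_000_000):
    miles = equivalent_miles(daily_images)
    print(f"{daily_images:>12,} images/day ≈ {miles:,.1f} miles driven")
```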

Video models like Runway Gen-2 or OpenAI’s Sora take this further. Analysts estimate that a 10-second AI video at 30 fps could use between 1 and 2 GPU-hours, or roughly 0.5–2 kWh depending on resolution.
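The relationship between GPU time and energy is a simple product: GPU-hours times average power draw. The sketch below works backwards from the quoted range; the 0.5 kW and 1.0 kW draw figures are assumptions inferred from that range (they are not measured values for any specific GPU or data center).

```python
def energy_kwh(gpu_hours: float, avg_power_kw: float) -> float:
    """Energy in kWh = GPU-hours multiplied by average power draw in kW."""
    return gpu_hours * avg_power_kw


# Assumed bounds: 1 GPU-hour at 0.5 kW (low end), 2 GPU-hours at 1.0 kW (high end).
low_estimate = energy_kwh(gpu_hours=1, avg_power_kw=0.5)
high_estimate = energy_kwh(gpu_hours=2, avg_power_kw=1.0)

print(f"10-second clip: {low_estimate:.1f} to {high_estimate:.1f} kWh")
```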

Text-to-speech systems like ElevenLabs and OpenAI's Voice Engine also carry compute costs. Synthesizing a 10-second voice clip may involve hundreds of millions of parameter activations, especially for multilingual or voice-cloning features.

The Cloud Is Not Always Clean

Even if AI providers claim efficiency, the real-world emissions depend heavily on the source of electricity.

Most major AI systems are hosted by the big three cloud providers: Amazon Web Services (AWS), Microsoft Azure, and Google Cloud. While these providers often tout "100% renewable" pledges, the reality is messier:

- AWS still operates many data centers in regions powered by coal and natural gas, like parts of Virginia and Ohio.

→ Amazon’s Grid Mix Disclosure (2023)

- Google claims to operate on 64% carbon-free energy in real-time globally (as of 2024), with major offsets still in play.

→ Google Cloud Sustainability

- Microsoft has pledged to be carbon negative by 2030.

→ Microsoft Sustainability Commitments

For more granular data on electricity sources, check Ember Climate or Scope3 Cloud Emissions.

Put simply: just because a data center buys credits doesn’t mean your AI image was generated with solar.
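A short sketch shows why the electricity source matters so much: emissions are the energy used multiplied by the carbon intensity of the local grid. All intensity values and the 1 kWh workload below are illustrative placeholders, not measurements for any real region or provider.

```python
# Illustrative placeholder intensities in grams of CO2e per kWh.
# These are assumptions for the example, not data for any real grid.
GRID_INTENSITY_G_PER_KWH = {
    "mostly_renewable_grid": 50.0,
    "mixed_grid": 400.0,
    "fossil_heavy_grid": 800.0,
}


def emissions_grams(energy_kwh: float, grid: str) -> float:
    """Location-based emissions: energy used times the local grid's carbon intensity."""
    return energy_kwh * GRID_INTENSITY_G_PER_KWH[grid]


workload_kwh = 1.0  # hypothetical AI job
for grid_name in GRID_INTENSITY_G_PER_KWH:
    grams = emissions_grams(workload_kwh, grid_name)
    print(f"{grid_name:<22} {grams:>6.0f} g CO2e")
```

Under these assumed numbers, the same job emits sixteen times more on the fossil-heavy grid than on the mostly renewable one, regardless of any credits purchased elsewhere.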

Water, Too

A less-discussed impact of AI training and inference is water usage. AI models require extensive cooling, often through evaporative processes that consume clean freshwater.

A 2023 study from the University of California, Riverside and UT Arlington estimated that training GPT-3 alone consumed 700,000 litres of clean water.

→ Study: “Making AI Less ‘Thirsty’” (arXiv preprint, 2023)

By the study’s own comparison, that’s enough water to manufacture 370 BMW cars or 320 Tesla electric vehicles.

Inference, the act of running the model (not just training it), continues this water use daily, especially in hot data center regions.

Can AI Go Green?

Some promising developments aim to make AI less damaging:

- DeepSeek-V2, released in 2024, uses a Mixture-of-Experts (MoE) architecture with sparse routing and performs competitively with dense models while using fewer FLOPs.

→ DeepSeek-V2: A Strong, Economical, and Efficient Mixture-of-Experts Language Model

- On-device AI: Running models on edge devices (like laptops or phones) can use less power and can bypass cloud emissions.

→ Apple Neural Engine Efficiency Claims

- Carbon labeling: A push is growing for companies to add environmental impact scores to AI usage, like food nutrition labels.

→ Wired: “AI Needs a Carbon Label”

- Regulation: The EU AI Act includes language around environmental transparency, but implementation lags.

Still, these are early steps. Without global standards or public pressure, the default path is more compute, more emissions.

We marvel at AI’s creativity, but behind every generated meme or essay lies real-world resource use: electricity from coal, clean water turned to steam, and GPUs devouring energy by the second. At the very least, users deserve transparency. We label calories on food, but not carbon on a poem. That needs to change.

In a warming world, convenience cannot keep coming at the planet’s expense.