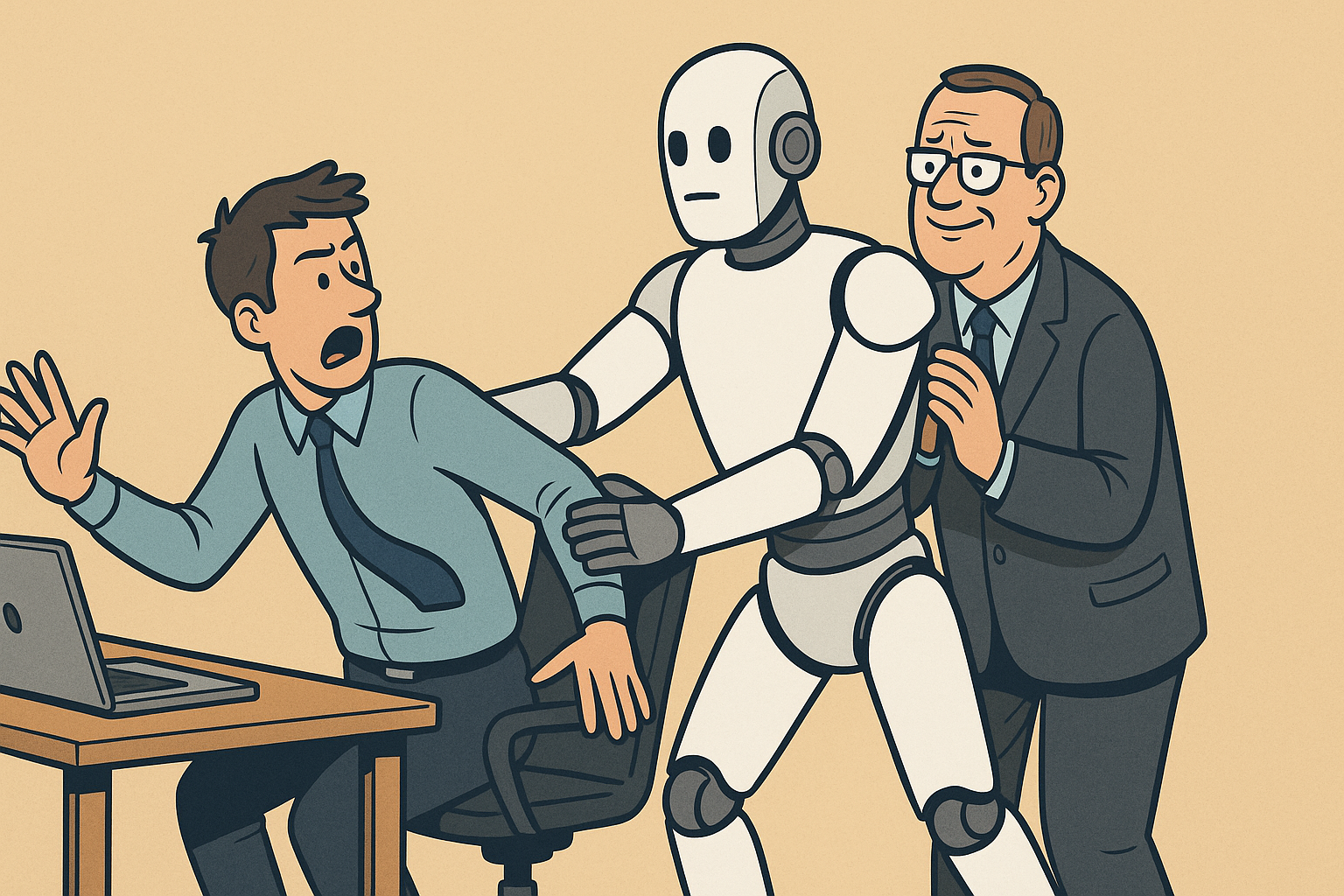

AI isn’t coming for your job; management is

The Myth of Inevitability

In Blood in the Machine, Brian Merchant dismantles the idea that technological displacement just happens. The first Luddites weren’t afraid of machines — they were angry at how those machines were being used by mill owners to devalue skilled labour and deskill entire professions.

We’re not facing a robot uprising. We’re facing a management decision.

A Pattern We Can Recognise

This story plays out again and again — in call centres, publishing houses, hospitals, retail stores, and classrooms. The promise is always the same: automation will free people to do “more meaningful work.” But what actually happens is mass layoffs, degraded services, and an innovation story designed to shield the people responsible.

Let’s take a closer look.

Klarna: AI Bragging, Human Rehiring

In 2022, Klarna laid off around 700 employees. In early 2024, CEO Sebastian Siemiatkowski announced that a new AI chatbot, developed with OpenAI, was handling two-thirds of customer support chats, supposedly doing the work of 700 full-time agents with satisfaction scores on par with human staff.

The media lapped it up. But beneath the headlines, Klarna admitted it was rehiring human agents. The AI couldn’t handle nuance. It frustrated users. And behind the scenes, support quality was slipping. (The Economic Times)

AI didn’t take 700 jobs. Corporate leadership did — with AI as cover.

Publishing: Replacing Writers With Word Salad

In 2023–2024, outlets like CNET, BuzzFeed, and Men’s Journal began quietly publishing AI-generated content.

- CNET had to pause its bot-written finance stories after factual errors were exposed. (Futurism)

- Men’s Journal ran health advice from ChatGPT-like tools — full of dangerous inaccuracies. (Futurism)

These weren’t tools to support journalists — they were replacements. The result? Worse information. Lost trust. Jobs cut. All in the name of “innovation.”

Art and Illustration: Imitation Without Compensation

Artists have been hit hard by AI image generators — especially in concept art and fantasy illustration. Tools like Midjourney and Stable Diffusion can churn out stylised visuals trained on the scraped work of real, unpaid creatives.

In early 2024, Wizards of the Coast was caught using what appeared to be AI-generated promotional art for Magic: The Gathering. Fans noticed distorted anatomy and inconsistent lighting — dead giveaways of AI. (Polygon)

Wizards of the Coast eventually admitted some use of generative tools, but the backlash was fierce. Artists and fans accused the company of sacrificing integrity and craft for speed and savings.

More broadly, artists have sued companies like Stability AI over alleged copyright infringement. (Ars Technica)

Customer Service: Frustration on a Loop

AI chatbots are now a staple across telecoms, banks, and online retailers. They’re meant to streamline support — but in reality, they often fail to resolve anything.

A Bloomberg study in 2023 found that AI-driven customer service leaves people more frustrated than dealing with human agents does, especially when systems fail to recognise tone or context. (Bloomberg)

Once again, this isn’t about serving customers better. It’s about reducing headcount — and hoping customers don’t mind.

Retail: Self-Checkout ≠ Progress

Walk into any supermarket today and you’ll find more kiosks than cashiers. But despite the narrative of “streamlined shopping,” retailers are quietly reversing course.

- Walmart began removing self-checkouts in response to theft and customer dissatisfaction. (Newsweek)

- Reports point to rising shrinkage losses, partly because “frictionless” systems fail to prevent missed scans. (CBS News)

Replacing workers with kiosks didn’t improve the experience. It offloaded labour onto customers — and created more problems than it solved.

Healthcare: Automating Compassion?

AI in healthcare sounds promising — predictive diagnostics, automated scheduling, triage bots. But real-world trials reveal cracks.

In the UK, NHS Trusts have trialled AI-powered tools like Limbic Access, a chatbot used to streamline mental health referrals. While these tools can help with efficiency and early access, concerns remain about their ability to understand complex emotional distress. Experts have warned that chatbots often miss subtle cues and can provide overly simplistic or inappropriate responses. (NHS England, Oxford Health NHS)

The British Medical Journal has also warned about overreliance on AI triage tools, highlighting patient safety risks when nuanced symptoms are reduced to keyword matching. (BMJ)

Healthcare is high-stakes. AI may support delivery — but it isn’t ready, and may never be able, to fully replace empathy and clinical judgment.

Education: Ghost Teachers, Ghost Answers

Edtech platforms now boast AI lesson planning, grading, and even classroom assistants. Some are helpful. But others erode the role of the teacher altogether.

AI is increasingly embedded in education platforms like Khan Academy and Quizlet, offering automated responses, feedback, and study guidance. But educators are sounding the alarm: these tools may hinder students’ ability to develop reasoning, ask good questions, and write with original thought.

In a recent Washington Post roundtable, columnist Molly Roberts warned that when students rely on AI to generate ideas or papers, it’s not just cheating — it’s stunting the formation of civic and cognitive muscles that schools are meant to develop. (Washington Post)

Meanwhile, AI tutors and writing assistants continue to expand in schools — often with limited oversight. Without strong pedagogical guidance, these tools risk reinforcing bias, oversimplifying concepts, and replacing human connection with templated answers.

Schools that adopt AI too aggressively risk dehumanising education — reducing it to prompts, completions, and data dashboards.

What’s Actually Being Optimised?

When a company replaces support staff with a bot, an illustrator with a generator, or a teacher with a template — what’s really being optimised?

- Not empathy.

- Not accuracy.

- Not user experience.

It’s labour cost.

It’s shareholder returns.

AI doesn’t force these decisions. It enables them. Executives make the call. Workers pay the price.

The Corporate Cloak of “Innovation”

Innovation should mean doing things better. But in practice, AI is often used to do things cheaper — even if they get worse.

The word “innovation” becomes a shield. It lets companies offload accountability. “The bot made the mistake.” “The model just wasn’t trained on that case.” “This is the way the world is going.”

But that’s a lie. Every AI deployment is a human choice.

What About the Workers?

What happens to the people who used to do those jobs — with skill, care, and nuance?

They’re told to “upskill.” To learn how to supervise the bot that replaced them. To be more “strategic.” But often, the new roles never materialise. The ladder collapses under the guise of disruption.

And those who remain? They’re asked to do more with less: cleaning up the bot’s messes while contending with lower pay, fewer colleagues, and vague promises about future-proofing.

Reclaiming the Narrative

This isn’t about being anti-tech. It’s about being pro-context, pro-labour, and pro-accountability.

When people speak out, things change:

- Klarna began rehiring.

- Wizards of the Coast clarified its AI policy.

- CNET paused AI content.

- Schools are reassessing classroom bots.

Pushback works. The myth begins to crumble when we stop accepting inevitability as an excuse.

Reflection

We don’t have to break machines — but we do need to stop believing they make these choices. They don’t. People do.

And when we hold the right people accountable — not the tools — we can begin to shift the conversation. The future of work doesn’t have to be soulless, de-skilled, or stripped of dignity.

It can be collaborative. Ethical. Human.

But only if we make it so.